Here’s the Secret to Spotting Bots and Fake News on Social Media

Back in the early 2000s, spotting a fake news story was as easy as looking at your browser’s address bar (you’ll notice how the web address of the above fake story, from 2001, is obviously not “BBC.com”). But in the age of social media, it’s become a lot harder to separate fact from fiction. This week, Facebook, Twitter, and Google’s parent company Alphabet testified before Congress about the role their platforms played in spreading Russian disinformation during the 2016 presidential election season. In written testimony, each company admitted that Russian troll and bot accounts, some of them linked to the Kremlin, bought US political ads and spread disinformation — AKA, fake news.

Facebook’s testimony revealed that up to 126 million users were exposed to Russian disinformation; Twitter revealed a list of thousands of Russian-linked accounts; Google stated in its testimony that at least 1,000 Russian-linked videos had been published to YouTube, and Russians purchased thousands of dollars in ads from Google. But what some of those ads actually looked like might surprise you.

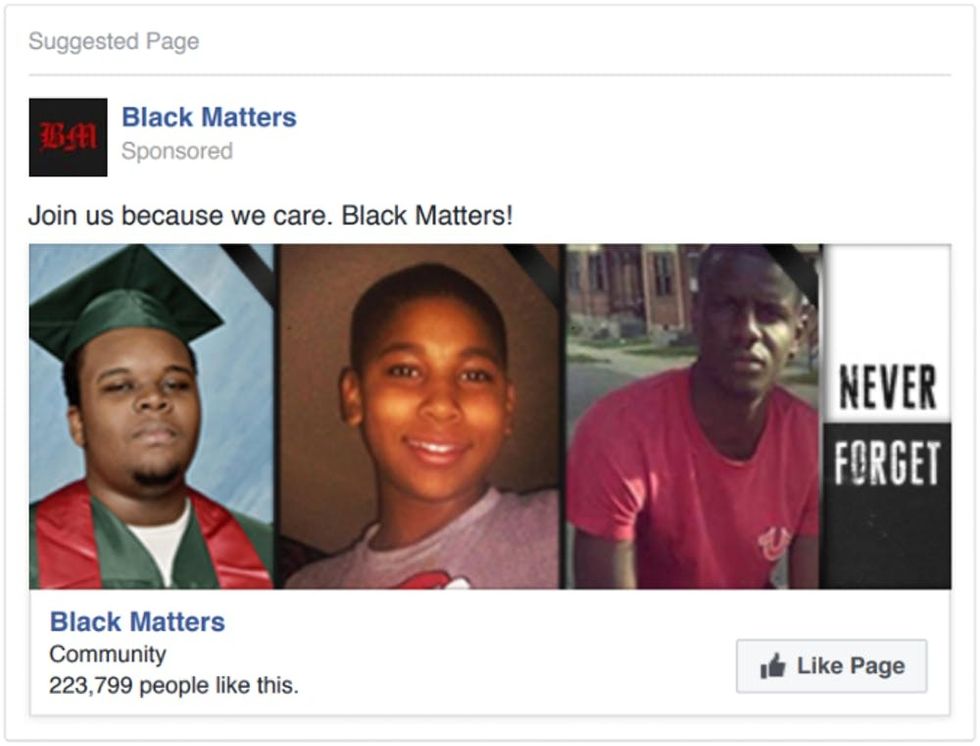

Here are a couple of examples of ads and events posted to Facebook that turned out to be part of a Russian disinformation campaign aimed at playing with the emotions of voters:

They look pretty convincing, right?

As far as Democrats in Congress are concerned, there’s still more work to be done in terms of figuring out exactly how and why this happened. But as far as preventing the spread of false and divisive information in the future, Ben Nimmo, the Information Defense Fellow with the Atlantic Council’s Digital Forensic Research Lab, tells Brit + Co that Congress and the tech companies themselves can’t do all that much about it.

“It’s up to us to learn how to identify a bot or troll,” Nimmo says, “and to identify when someone is trying to trigger you emotionally” with disinformation and fake stories.

There’s a lot of information coming at us at pretty much all times on the internet, so thinking about how to keep up with and process real news while also being on the lookout for the fake and harmful stuff may sound overwhelming. But with just a little bit of information about what bots and trolls look like, and some simple internet sleuthing skills, it’s relatively easy to be on guard.

Here are some best practices for spotting accounts that are spreading disinformation so you can notice them, report them, and definitely not share any of their posts.

Nimmo says that Twitter users can easily use the information available about another account to determine whether or not it’s a bot. That’s because Twitter gives users a lot of data about other users: You can see not only someone else’s own posts and retweets, but also their likes, how many tweets they have sent in total, when their account was made, and so on. This information can give you everything you need to tell whether an account is legit, as long as you know where to look.

A good place to start is to see just how much an account is tweeting. According to Nimmo, even the most active real human tweeter won’t typically send more than 72 tweets a day (that’s an average of six tweets an hour for 12 hours… so, a lot of tweets!). Anything above that could be cause for suspicion, especially if the tweets are all, or mostly, retweets, which Nimmo calls a classic sign. A bot can send out hundreds of tweets or retweets a day, so if an account is racking up huge numbers of tweets on a daily basis, be wary.

Also be suspicious if an account doesn’t post or share any personal information. Check out their avatar: Is it a picture of a real person? Is there a picture at all? What about a banner photo: Do they have one? Is their username a series of random numbers and letters, or is it a real name? The absence of any personal information alone doesn’t necessarily spell bot, but if the account also never seems to share anything other than emotionally charged political posts without ever saying anything about their own life, it’s a red flag. It’s doubly a red flag if the account is also tweeting hundreds of times a day.
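For readers who like to see a checklist written down, the checks described so far can be sketched in a few lines of Python. This is only an illustration, not a tool Nimmo or Twitter provides: the `AccountSnapshot` fields and the `red_flags` function are hypothetical names, the numbers are ones you would jot down by hand from a public profile, and the 50 percent cutoff for “mostly retweets” is an assumption. The only figure taken from the article is the 72-tweets-a-day ceiling.

```python
from __future__ import annotations

from dataclasses import dataclass

# From the article: about 72 tweets a day (six an hour for 12 hours)
# is the upper end for even a very active human.
MAX_HUMAN_TWEETS_PER_DAY = 72

# Assumption for illustration: "mostly retweets" means more than half.
MOSTLY_RETWEETS_SHARE = 0.5


@dataclass
class AccountSnapshot:
    """Hypothetical, hand-collected facts about a public account."""
    total_tweets: int
    account_age_days: int
    retweet_share: float          # fraction of recent posts that are retweets (0.0 to 1.0)
    has_avatar: bool
    has_banner: bool
    shares_personal_posts: bool


def red_flags(account: AccountSnapshot) -> list[str]:
    """Return the warning signs that apply; more flags means more reason for caution."""
    flags = []

    # Lifetime average: total tweets divided by days since the account was made.
    tweets_per_day = account.total_tweets / max(account.account_age_days, 1)
    if tweets_per_day > MAX_HUMAN_TWEETS_PER_DAY:
        flags.append("high volume")

    if account.retweet_share > MOSTLY_RETWEETS_SHARE:
        flags.append("mostly retweets")

    if not (account.has_avatar and account.has_banner):
        flags.append("sparse profile")

    if not account.shares_personal_posts:
        flags.append("no personal posts")

    return flags
```

As the article stresses, no single flag proves anything; it is the combination that matters. An account with 90,000 tweets in 300 days (300 a day on average) that mostly retweets and shows nothing personal would trip every check here, while a ten-tweets-a-day account with a real photo and the occasional pet picture would trip none.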

The avatar photo can also be a giveaway, and with a little bit of internet detective work, it’s easy to find out if a photo is a fake. Nimmo says that bots will often use photos of very stereotypically beautiful women. To check whether or not a photo is the real deal, perform a reverse image search. When browsing in Chrome, simply right-click on the image and click “Search Google for image.” If the results that come up are associated with a bunch of different people, or if it’s a photo of someone who isn’t otherwise involved in political messaging, that’s another red flag.

Nimmo explains that because Facebook doesn’t make as much information about an account as easily available as Twitter does, it can be a bit trickier to spot the fakes. For example: Twitter displays how many total tweets a person has sent, whereas Facebook doesn’t show total posts. But a lot of the same methods used to spot bots on Twitter can also be applied to Facebook, according to Nimmo.

On Facebook, users will want to pay attention to what, if any, personal information is being shared by an account that posts a lot of incendiary political content. Nimmo says that normal humans on Facebook will share a variety of posts, including things like recipes and photos of cute animals (you know the drill!). If there are no personal or “normal” posts of any kind, just a lot of political material that’s designed to provoke a reaction, that’s a red flag that you’re dealing with a bot.

And of course, it would be a good idea to reverse image search the avatar photos of suspicious-looking Facebook accounts too.

Most of the Russian meddling on Google was found on YouTube (which is a Google property): Business Insider reports that more than 1,000 videos linked to the Kremlin were posted to the site leading up to the election. The Russian “troll farm” Internet Research Agency also bought $4,700 worth of ads from Google during the election season.

When searching Google for news, Nimmo says it’s vital to check other sources before believing or sharing a political story that elicits a strong emotional response. If you Google search a phrase and see news stories that immediately make you feel afraid or angry, check the source: Is it a site you recognize, or is the name unfamiliar? Is it a reputable news organization such as the Guardian or the New York Times? Nimmo also advises scrolling through a few pages of news stories to see if many outlets are reporting a story.

If it’s a real story and it’s something major, you can bank on most mainstream, reputable sites reporting on it. If just a couple of sites are reporting the story, and especially if they’re making claims that the mainstream press is “covering it up,” it’s pretty likely that the story isn’t based on real facts.


(Photos via the US House of Representatives Democrats Permanent Select Committee on Intelligence + Getty)